- Anybody Can AI

Tesla Plans “Terafab” to Build Its Own AI Chips

PLUS: Apple to Pay Google ~$1B/year for Gemini-Powered Siri Overhaul

Elon Musk Eyes a Giant AI Chip Factory, Hinting at Intel Collaboration

Elon Musk is taking Tesla’s AI ambitions to new heights - or new wafer counts. The company is considering building a “terafab”, an enormous AI chip factory that could dwarf existing Gigafactories. The move signals Musk’s push for vertical integration in AI hardware, as Tesla aims to control everything from data collection to chip production - and possibly rope in Intel as a partner.

Key Points:

“Terafab”-Scale Manufacturing - Musk says Tesla may build a chip fab with a capacity of 100,000 wafer starts per month, arguing that current suppliers like TSMC and Samsung cannot deliver the chip volume Tesla needs.

Intel Partnership on the Table - Musk called it “probably worth having discussions” with Intel about collaboration, briefly boosting Intel’s stock as the company seeks more foundry customers.

Next-Gen AI Chips (AI5 & AI6) - Tesla’s in-house AI5 chip aims to use one-third the power of Nvidia’s Blackwell at 10% of the cost, with mass production expected by 2027. Its successor, AI6, is expected to double performance by 2028.

An in-house fab would not only reduce Tesla’s dependency on TSMC or Nvidia but could also reshape the U.S. chip landscape if Intel joins in. For AI development, it means faster iteration, lower cost per compute, and more autonomy over the most strategic layer of the AI stack: silicon.

Chinese Startup Moonshot AI’s Kimi K2 Surpasses GPT-4.1 & Claude

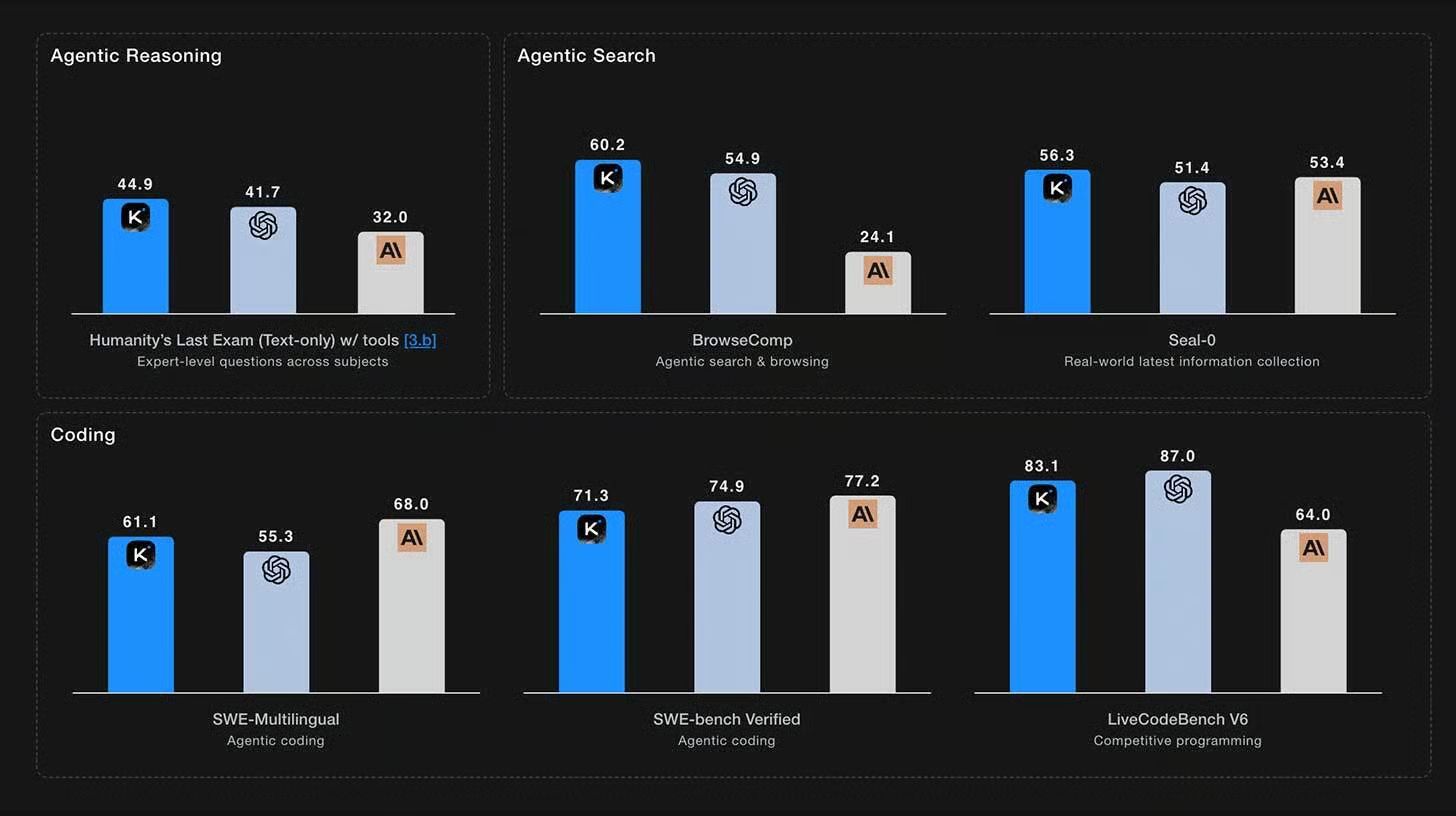

Beijing-based Moonshot AI has released its latest large language model, Kimi K2, claiming open-source leadership with a mixture-of-experts design featuring 1 trillion total parameters and about 32 billion active per token. The model is positioned to rival top commercial models such as GPT-4.1 and Claude Opus 4 in coding, reasoning and agentic tasks, marking a notable shift in the global AI landscape.

Key Points:

Massive MoE Architecture - Kimi K2 employs a mixture-of-experts (MoE) architecture with 1 trillion total parameters, of which only about 32 billion are active for any given token, giving it high capacity while keeping compute cost manageable.

Top Benchmark Performance - Reported benchmark results show K2 outperforming earlier open models and rivaling commercial ones in coding and reasoning, with strong scores on LiveCodeBench, SWE-bench and other tasks.

Open Weights + Agentic Focus - Released as open weights under a modified MIT-style license, and optimized for “agentic intelligence” (tool use, reasoning chains, autonomous workflows), Kimi K2 signals a move from chat-only LLMs to models that act.
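To make the "1 trillion total, 32 billion active" idea in the key points above more concrete, here is a minimal sketch of generic top-k expert routing, the basic mechanism behind most mixture-of-experts models. It is an illustration only: the expert count, the value of k, and the toy "experts" are assumptions made up for this example, not Kimi K2's actual configuration or code. The only figures taken from the article are the two parameter counts.

```python
import math
import random


def top_k_route(scores, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    peak = max(scores[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(scores[i] - peak) for i in top]
    total = sum(exps)
    return top, [e / total for e in exps]


def moe_forward(x, experts, router_scores, k):
    """Run only the selected experts and blend their outputs by router weight."""
    chosen, weights = top_k_route(router_scores, k)
    output = sum(w * experts[i](x) for i, w in zip(chosen, weights))
    return output, chosen


# Hypothetical toy setup: 8 experts, 2 used per input (not Kimi K2's real values).
NUM_EXPERTS, TOP_K = 8, 2
experts = [lambda x, scale=i + 1: scale * x for i in range(NUM_EXPERTS)]

rng = random.Random(0)
scores = [rng.gauss(0, 1) for _ in range(NUM_EXPERTS)]  # stand-in for a learned router
output, chosen = moe_forward(1.0, experts, scores, TOP_K)

# Parameter counts as quoted in the article.
active_fraction = 32e9 / 1e12

print(f"experts used: {len(chosen)} of {NUM_EXPERTS}")
print(f"active share of parameters: {active_fraction:.1%}")
```

The point of the design is that the unused experts cost nothing at inference time: with the article's figures, roughly 3.2% of the model's parameters do the work for any single token, even though all of them must still be stored.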

When a Chinese startup openly releases a trillion-parameter model aiming to compete with Western giants, the dynamics of model ownership, openness, cost, and global access shift. Developers now have access to frontier-tier weights, which could accelerate innovation, reduce dependency on closed models, and reshape the competitive landscape. On the flip side, it raises questions about safety, governance, trust, and how “open” translates into real-world impact.

Lumonus Raises A$25M to Scale AI-Powered Oncology Workflows

Australian startup Lumonus has closed a A$25 million Series B round to expand its AI-driven oncology workflow platform globally, targeting health systems in the U.S., Australia and Europe.

Key Points:

Series B raise of A$25 million - The funding round was led by Aviron Investment Management, with participation from Oncology Ventures.

Platform traction: 280,000+ treatments & 75,000+ plans - Lumonus’ AI platform has supported clinicians in consulting and prescribing over 280,000 cancer treatments and automated more than 75,000 treatment plans.

Growth-focused use of proceeds - The new capital will help expand the U.S. go-to-market and clinical success teams, advance key products (Lumonus AI Physician & Lumonus AI Dosimetry), and invest in clinical informatics to harness real-world evidence for personalized care.

The platform aims to enhance consistency, speed and personalization in one of the most complex treatment domains. As cancer care becomes more data- and model-driven, platforms that bridge innovation and delivery will be key. That shift creates important opportunities (better outcomes, broader access) and prompts questions around safety, regulatory validation, bias in models, and deployment in high-stakes environments.

Microsoft Unveils “Humanist Superintelligence” Initiative

Microsoft has announced a new strategic push in AI, establishing the MAI Superintelligence Team under the leadership of Mustafa Suleyman (CEO of Microsoft AI). The initiative centres on what Microsoft calls “Humanist Superintelligence” (HSI): advanced AI systems designed not to dominate but to serve humanity, keeping humans “at the centre of the picture.”

Key Points:

Human-Centred Superintelligence - HSI is defined by Microsoft as “incredibly advanced AI capabilities that always work for, in service of, people and humanity more generally.” It emphasises AI with specific, domain-focused purpose, rather than an unbounded system with full autonomy.

New Team & Research Focus - By forming the MAI Superintelligence Team, Microsoft is committing to research and build models that are “grounded and controllable,” rejecting narratives of a race to AGI for its own sake.

Application Domains Highlighted - Microsoft mentions three early domains for HSI: AI companions for individuals, medical superintelligence for diagnostics & treatment, and clean energy breakthroughs (e.g., battery storage, carbon removal).

Microsoft is signalling that the next front in AI is not just bigger models, but better aligned models - ones where purpose, control, and human value are integral, not afterthoughts. As conversations around AI risk, governance, and value intensify, Microsoft’s articulation of “humanist superintelligence” offers a blueprint (or at least a framing) for how large tech players aim to steer the future. Whether it becomes a genuine differentiator or a branding gloss remains to be seen - but it’s a development worth watching.

Apple Turns to Google’s 1.2 Trillion-Parameter Gemini Model

Apple Inc. is reportedly nearing a deal with Google LLC to license a custom version of Google’s Gemini AI model - believed to have 1.2 trillion parameters - which will power a major upgrade to Apple’s Siri voice assistant. The deal is said to involve Apple paying around $1 billion per year for access to the technology.

Key Points:

$1 billion/year access fee - Apple would reportedly pay Google about $1 billion annually for a custom Gemini model to handle core Siri functions.

Massive model scale (1.2 trillion parameters) - The Gemini version Apple will use is reportedly far larger than Apple’s current internal models (≈150 billion parameters).

Bridge strategy until in-house AI is ready - Apple’s reliance on Google is described as interim: the company still plans to build its own large-scale models, but will use Gemini as a stop-gap to catch up in AI assistant capabilities.

Apple, long known for owning its hardware and software stack, is partnering externally to accelerate its AI offering. The deal highlights how model scale, access and partnerships are becoming key strategic levers - especially in assistants and embedded AI. It also raises broader questions:

What does it mean when a competitor supplies your core AI engine?

How will data, privacy and model ownership be handled?

How sustainable is this bridge strategy when every major player, Apple included, aims to build persistent internal AI infrastructure?

Thank you for reading.